Avatar’s Facial Expressions with “Manpu (Comic Symbols)” by Using Multiple Biometric Information

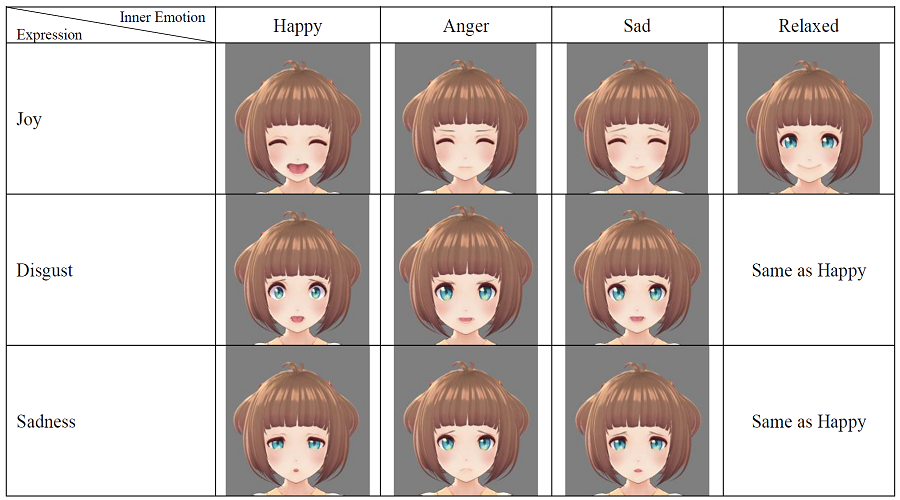

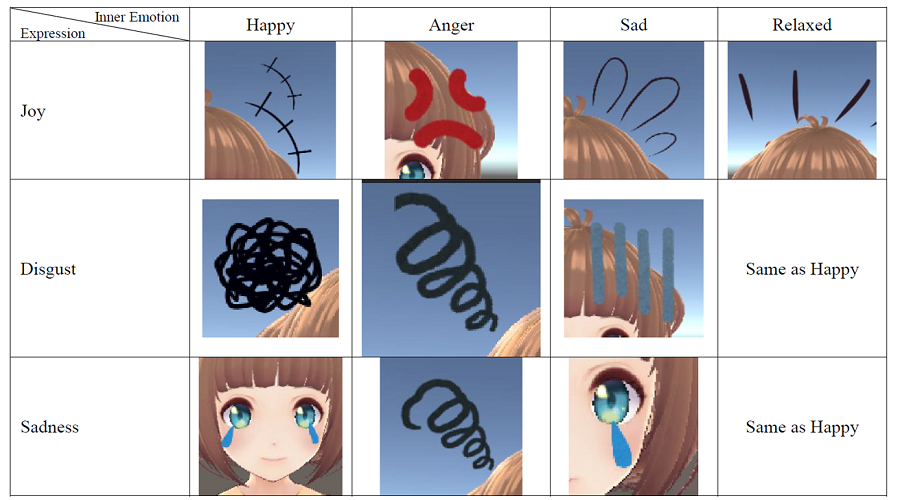

Generating natural facial expressions for an avatar from a user’s facial images is a challenging problem. One of the most difficult parts is analyzing the user’s facial images and estimating the user’s emotions. We previously proposed a method that estimates a user’s emotions by combining analysis of facial images captured by a video camera with a heart rate measure, pNN50. Here, pNN50 is the percentage of differences between adjacent heartbeat intervals that exceed 50 ms, calculated from heart rate information. Each user’s emotion was classified as one of ‘Joy’, ‘Disgust’, ‘Sadness’, or ‘Anger’. Our previous method estimated users’ emotions and generated appropriate avatar face images. However, the measurement of each emotion must be improved further for avatars to show natural facial expressions. In this paper, we propose a new method that also measures the skin conductance level (SCL) with a biometric sensor. We focus on “Russell’s circumplex model of affect”, in which each emotion is described as a 2D vector of ‘valence’ and ‘arousal’. Our method calculates ‘valence’ from pNN50 and ‘arousal’ from the SCL. The four quadrants of “Russell’s circumplex model of affect” correspond to ‘Joy’, ‘Disgust’, ‘Sadness’, and ‘Anger’, respectively. By combining multiple biometric signals, i.e., pNN50 and SCL, the proposed method improves the accuracy of emotion estimation and appropriately generates natural facial expressions for the avatar by adding comic symbols (called “Manpu”). Because “Manpu” emphasize emotions, they make human emotions easier to communicate.
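As a rough illustration of the pipeline described above, the following Python sketch computes pNN50 from a list of RR (beat-to-beat) intervals in milliseconds and maps a (valence, arousal) pair to one of the four quadrants. It is not the authors’ implementation. Several details are assumptions: the per-user resting baselines (`pnn50_baseline`, `scl_baseline`), the sign conventions (pNN50 above baseline means positive valence, SCL above baseline means higher arousal), the function names, and the quadrant-to-emotion table. That table numbers the quadrants counterclockwise from the positive-valence, high-arousal quadrant and assigns the emotions in the order the abstract lists them.

```python
from typing import Sequence

# Hypothetical quadrant-to-emotion table: quadrants numbered counterclockwise
# from (+valence, +arousal), labelled in the order the abstract lists them.
QUADRANT_LABELS = {1: "Joy", 2: "Disgust", 3: "Sadness", 4: "Anger"}


def pnn50(rr_intervals_ms: Sequence[float]) -> float:
    """Percentage of adjacent RR-interval differences greater than 50 ms."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [abs(b - a) for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    over_50 = sum(1 for d in diffs if d > 50.0)
    return 100.0 * over_50 / len(diffs)


def quadrant(valence: float, arousal: float) -> int:
    """Return the circumplex quadrant (1-4); points on an axis go to the
    quadrant on the non-negative side."""
    if valence >= 0 and arousal >= 0:
        return 1
    if valence < 0 and arousal >= 0:
        return 2
    if valence < 0 and arousal < 0:
        return 3
    return 4


def estimate_emotion(
    rr_intervals_ms: Sequence[float],
    scl_microsiemens: float,
    pnn50_baseline: float,
    scl_baseline: float,
) -> str:
    """Hypothetical estimator: valence and arousal are deviations from
    assumed per-user resting baselines."""
    valence = pnn50(rr_intervals_ms) - pnn50_baseline
    arousal = scl_microsiemens - scl_baseline
    return QUADRANT_LABELS[quadrant(valence, arousal)]


if __name__ == "__main__":
    # pNN50 here is 2/3 * 100 (diffs 60, 10, 70 ms), above a baseline of 50;
    # SCL 5.0 is above a baseline of 3.0, so the point falls in quadrant 1.
    print(estimate_emotion([800, 860, 870, 800], 5.0, 50.0, 3.0))  # prints "Joy"
```

Using deviations from a resting baseline rather than raw values is one simple way to place both signals on a signed axis; a real system would likely need per-user calibration and smoothing over a time window.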
